Algorithm Example: Deep TAMER

A natural way for humans to guide an agent's learning is to observe its inputs and provide feedback on its actions. This translates directly to incorporating human feedback into reinforcement learning by assigning human feedback as state-action value. Deep TAMER is a prominent human-guided RL framework that leverages this concept by enabling humans to offer discrete, time-stepped positive or negative feedback. To account for human reaction time, a credit assignment mechanism maps feedback to a window of state-action pairs. A neural network \(F\) is trained to estimate the human feedback value, denoted as \(\hat{f}_{s,a}\), for a given state-action pair \((s, a)\). The policy then selects the action that maximizes this predicted feedback.

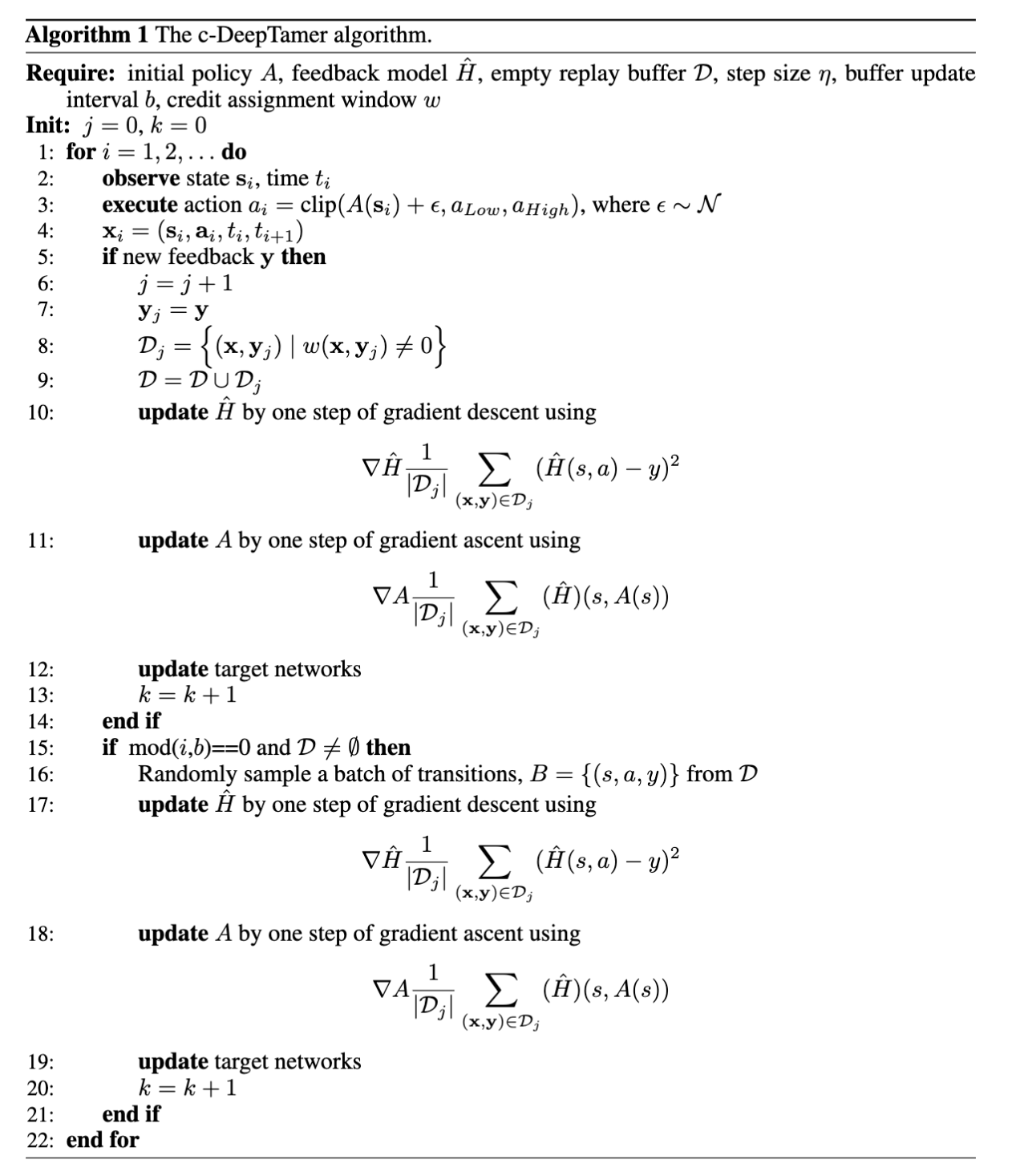

The algorithm example we provide here is enhances the original Deep TAMER in numerous ways. First, the original Deep TAMER formulation relies on DQN, which only works with discrete action spaces. We designed a continuous version of Deep TAMER while adopting state-of-the-art reinforcement learning implementation practices. We implement an actor-critic framework to handle continuous action space. Here, the critic is the human feedback estimator \(F(s, a)\), directly estimating human feedback instead of a target action value. The actor is parameterized by a neural network \(A(s) = a\), aiming to maximize the critic's output. The combined objective is defined as: \begin{equation} \mathcal{L_{\text{c-deeptamer}}} = ||F(s,A(s)) - f_{s,A(s)}||_2 - F(s, A(s)) \end{equation} We follow recent advancements in neural architectures and hyperparameter designs. Our strong baseline not only enhances Deep TAMER to continuous actions and recent RL practices but also maintains the core methodology of integrating real-time human feedback into the learning process.